Data Center Cooling: What are the top concepts you need to know?

Data center cooling is a growing industry. Data streaming and storage have been business necessities for some time, even before 2020’s global COVID-19 lockdowns. Since then, we’ve further increased our reliance on data-driven services to power our work and our lives. As a result, data centers are running hotter than ever, and they need experts to keep them cool.

But what is a data center, anyway? Why are so many companies using them? And most importantly, what’s an HVAC (heating, ventilation, and air conditioning) company got to contribute to the conversation? Don’t worry, you’ll get all these answers (and more)—plus, they’ll be in plain language that won’t need an IT degree to decode!

In this article, we’ll:

- Define what a data center is,

- Outline the main types of data centers,

- Explain why data centers need cooling,

- Outline the main types of data center cooling systems,

- Walk you through how data center cooling works,

- Discuss eco-friendly options, and

- Share four basic features that most successful data centers have in common.

Ultimately, if you’re building a data center, either for your business or to store other businesses’ data, our goal is to help you understand how an HVAC company can help.

What’s a data center?

A data center is a warm, noisy space where you’ll find servers (that is, computers designed to talk to other computers) and network equipment. Data centers are a key ingredient for any business that uses information to meet customers’ needs.

What does a data center do?

Data centers bring together all of a business’ IT operations into a single place. A data center is more than the servers, racks (also called cabinets), and other equipment inside the room. It’s where all the communication happens between the business’ servers and the computers that allow employees to do their jobs, keep data moving, and even keep their building’s operations running.

When systems go down and data stops moving, businesses lose productivity and money. Having a data center in place with the right IT team can prevent these losses.

What are the different types of data centers?

A data center typically falls under one of two categories: enterprise or edge.

Enterprise Data Centers

An enterprise data center (also known as a single-tenant data center) is designed to meet a single business’ specific needs.

The business it serves might be the one that owns it, or it could be a single client that has huge data storage and processing needs but can’t run a data center on its own. (Fun fact: if you’ve heard the term “server room” before, it refers to a small-scale, single-location enterprise data center.)

This data center type varies in size and even number of facilities, depending on what the business is looking for. Google, for example, has 23 enterprise facilities worldwide. This is a good practice for any business with multiple offices or locations spread across a large geographic area, since sharing the demand will prevent overload and any resulting delays.

Edge Data Centers

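An edge data center, as we’re using the term here, is meant to meet several businesses’ needs. (You’ll also hear this multi-tenant setup called a colocation data center; in wider industry usage, “edge” often means a smaller facility located close to the people using it.)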

It’s used most often by colocation providers, who serve businesses that either can’t—or don’t want to—run their own data centers. The businesses provide their own equipment, and the colocation company provides the server space. So, a business can rent out either an entire facility or just some rack space.

Both data center types can save money for a business. It just depends on what your business needs. Maybe it makes more sense to run your own data center to control your tech and data coverage. Or maybe you don’t have room in your building for a server room, and you’re looking for predictable monthly maintenance costs through an edge data center. There’s no wrong answer—the important piece is finding the solution to fit your needs.

Why do data centers need cooling?

Stop us if you’ve heard this one: 50 servers walk into a data center… and it gets really, really hot. Data centers use a lot of power (by some estimates, about 3% of all global electricity), and nearly all of that power ends up as heat. Cooling removes the extra heat and keeps servers operating at peak efficiency.
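To put a rough number on "really, really hot," here is a minimal Python sketch that converts server power into a cooling load. It assumes that essentially all the electricity the servers draw turns into heat, and the 500 W per server figure is a made-up placeholder, not a measurement. The function name `heat_load` is ours for illustration.

```python
# Rough heat-load estimate for a room of servers.
# Assumption: essentially all electrical power drawn by the servers becomes heat.

BTU_PER_HOUR_PER_WATT = 3.412   # 1 W is about 3.412 BTU/hr
BTU_PER_HOUR_PER_TON = 12_000   # 1 ton of cooling = 12,000 BTU/hr


def heat_load(server_count, watts_per_server):
    """Return the estimated heat load in kW, BTU/hr, and tons of cooling."""
    total_watts = server_count * watts_per_server
    btu_per_hour = total_watts * BTU_PER_HOUR_PER_WATT
    return {
        "kw": total_watts / 1000,
        "btu_per_hour": btu_per_hour,
        "tons": btu_per_hour / BTU_PER_HOUR_PER_TON,
    }


# 50 servers at a hypothetical 500 W each
load = heat_load(50, 500)
print(f"{load['kw']:.1f} kW, {load['btu_per_hour']:,.0f} BTU/hr, {load['tons']:.1f} tons")
```

With those placeholder numbers, the room needs roughly 7 tons of cooling just to break even, before you account for lighting, people, or heat coming through the walls.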

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommends keeping your data center at a somewhat cool temperature, usually 19 – 21°C (66 – 70°F). The ideal server room temperature is no more than 25°C (77°F). The temperature at the server inlets, where cooling air enters the devices, should be 18 – 27°C (64 – 81°F) with a humidity of 20 – 80%.
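If you’re logging sensor readings, a check against the inlet range is easy to automate. The Python sketch below uses the 18 – 27°C inlet range mentioned above; the function names and status labels are our own, chosen for illustration.

```python
# Check a server inlet temperature against the 18 - 27 degC range.

INLET_LOW_C = 18.0
INLET_HIGH_C = 27.0


def c_to_f(temp_c):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32


def inlet_status(temp_c, low=INLET_LOW_C, high=INLET_HIGH_C):
    """Classify an inlet reading as 'too cold', 'ok', or 'too hot'."""
    if temp_c < low:
        return "too cold"
    if temp_c > high:
        return "too hot"
    return "ok"


for reading in (15.0, 22.5, 30.0):
    print(f"{reading:.1f} C ({c_to_f(reading):.1f} F): {inlet_status(reading)}")
```

A real monitoring setup would also track humidity and alert on trends rather than single readings, but the basic comparison looks like this.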

The area directly around the servers is much warmer than the rest of the room because of heat transfer. This is especially true if your data center has many servers.

Maintaining a specific data center temperature range can be a challenge. Combined with the data center industry’s rebounding growth after COVID-19, this is why there’s such a demand for data center cooling. Experts predict by 2025, the data center cooling market in the United States will grow by more than 3% and reach a market size of over $3.5 billion.

Why do some data centers run warmer instead of cooler?

Some businesses prefer to let their data center run warmer rather than cool it further. Depending on the requirements for all the servers in the data center, it may not be possible to find an optimal server room temperature that works for all of them. And if a data center runs hotter by design, the building’s cooling systems don’t have to work as hard to carry excess heat outside, which lowers energy use.

Larger data centers tend to prefer this method. They run at the highest recommended temperatures, accepting that equipment will fail more often. It might seem like poor planning at first glance, but some smart people with calculators determined the costs of 24/7 air conditioning versus the occasional costs of maintenance and repair. Guess which one costs more? Yup, the one that sends your energy bill through the roof during a heat wave.

So, for these data centers, it’s actually a better use of their budgets to invest in occasional server maintenance and replacement than it is to consistently maintain a lower temperature.
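Here’s a sketch of the kind of math those people with calculators might run, written in Python. Every number in it (cooling bills, failure rates, replacement cost) is a hypothetical placeholder we picked to show the shape of the comparison. Real figures depend on your equipment, climate, and energy prices.

```python
# Compare the yearly cost of running cool versus running warm.
# All inputs below are hypothetical placeholders, not real-world data.


def annual_cost(cooling_cost, servers, failure_rate, replacement_cost):
    """Yearly cooling bill plus the expected cost of replacing failed servers."""
    expected_failures = servers * failure_rate
    return cooling_cost + expected_failures * replacement_cost


cool_room = annual_cost(cooling_cost=200_000, servers=1000,
                        failure_rate=0.02, replacement_cost=5_000)
warm_room = annual_cost(cooling_cost=140_000, servers=1000,
                        failure_rate=0.03, replacement_cost=5_000)

print(f"Cool room: ${cool_room:,.0f} per year")
print(f"Warm room: ${warm_room:,.0f} per year")
print("Warmer is cheaper" if warm_room < cool_room else "Cooler is cheaper")
```

Change the placeholder inputs and the answer can flip, which is why this decision is worth running with your own numbers.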

What kinds of data center cooling systems are available?

Air Cooling

How it works: Older and smaller data centers combine raised floors with a Computer Room Air Conditioner (CRAC) or Computer Room Air Handler (CRAH). When the CRAC or CRAH sends out cold air, the pressure in the space below the raised floor increases, and the cold air travels into the server inlets. The air then carries heat from the server back into the CRAC or CRAH, where it recirculates.

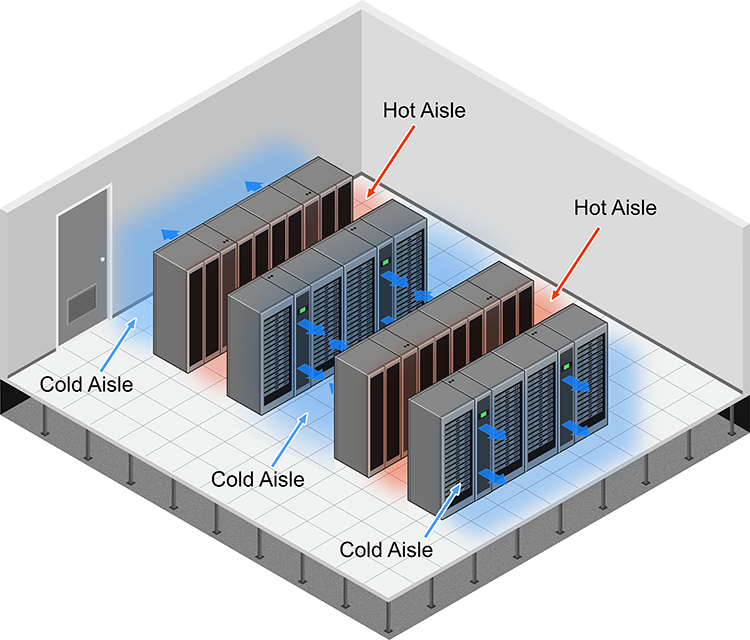

This type of system works best for small data centers with low power requirements. If your data center uses a large amount of power, a single unit can’t take the heat. That’s why newer and larger facilities also use hot and cold aisle setups to separate the cool intake air from the heated exhaust air. By keeping this air from mixing, you can better regulate the overall air temperature in the data center.
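To see why airflow becomes the bottleneck as power goes up, you can estimate how much air it takes to carry away a given heat load. The Python sketch below uses the common HVAC rule of thumb for sensible heat, BTU/hr = 1.08 × CFM × ΔT(°F), which assumes standard air near sea level. The 10 kW load and 20°F temperature rise are illustrative values only, and the function name is ours.

```python
# Estimate the airflow needed to remove a heat load with air cooling.
# Rule of thumb for standard air: BTU/hr = 1.08 * CFM * delta_T (deg F).

BTU_PER_HOUR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08  # assumes standard air density near sea level


def required_airflow_cfm(heat_watts, delta_t_f):
    """Airflow in cubic feet per minute for a heat load and air temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be greater than zero")
    btu_per_hour = heat_watts * BTU_PER_HOUR_PER_WATT
    return btu_per_hour / (SENSIBLE_HEAT_FACTOR * delta_t_f)


# A hypothetical 10 kW load with a 20 deg F rise from intake to exhaust
cfm = required_airflow_cfm(10_000, 20)
print(f"About {cfm:,.0f} CFM")
```

Notice that a smaller temperature rise means more airflow for the same load. When hot and cold air mix, the usable temperature difference shrinks, so the fans have to move more air to do the same job.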

What’s the difference between a CRAC and a CRAH?

A CRAC is pretty much like the AC unit in your home, actually. It blows air from your space over a coil containing refrigerant, which cools the air and pushes it back out into the space. A CRAH blows air over a coil filled with chilled water instead of refrigerant. The unit itself is more energy efficient than a CRAC since it doesn’t have a built-in compressor, which uses loads of power; the water is typically chilled elsewhere in the building by a chiller plant.

Liquid Cooling

This developing area shows promise for modern data center cooling, since it’s cleaner and more efficient than air cooling. Liquid cooling also means you don’t have to maintain two separate systems, which boosts efficiency.

There are two main types of liquid cooling: full immersion and direct-to-chip. Here’s how they work:

- Full immersion does just what it says: dunking the equipment into dielectric fluid in a closed system. The fluid absorbs heat and, in two-phase systems, turns into vapor and condenses again, all of which helps cool down the equipment.

- Direct-to-chip cooling is less delicious than it sounds, but still pretty neat. It goes straight to the source by sending coolant to cold plates mounted on the hottest components on the motherboard, such as the processors. This method depends on your building having a chiller, since the heat needs to travel from the motherboard into your facility’s overall cooling system.

Are there eco-friendly data center cooling options?

Modern data centers use a lot of power and contribute to CO2 emissions. So, they’re looking for ways to either reduce or offset their environmental footprint.

One of the simplest but most effective cooling systems is an air-based process known as free cooling. If you’re located somewhere cold, you can draw pre-chilled air from outdoors into your data center, then send heated exhaust air back outside.
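A quick way to judge whether free cooling is worth exploring is to check how often your outdoor air is cold enough. This Python sketch counts the share of hourly readings at or below a cutoff temperature. The 18°C cutoff and the sample readings are assumptions for illustration, and the sketch ignores humidity and air quality, which matter in practice.

```python
# Estimate how much of the time outdoor air is cold enough for free cooling.
# The cutoff and sample data are illustrative assumptions.


def free_cooling_fraction(outdoor_temps_c, max_outdoor_c=18.0):
    """Fraction of readings at or below the cutoff temperature (0.0 to 1.0)."""
    if not outdoor_temps_c:
        return 0.0
    usable = sum(1 for temp in outdoor_temps_c if temp <= max_outdoor_c)
    return usable / len(outdoor_temps_c)


# Hypothetical hourly outdoor temperatures in degrees Celsius
sample_day = [4, 3, 3, 2, 2, 3, 5, 8, 11, 14, 17, 19,
              21, 22, 22, 21, 19, 16, 13, 10, 8, 7, 6, 5]
share = free_cooling_fraction(sample_day)
print(f"Free cooling available {share:.0%} of the time")
```

Run this against a full year of local weather data and you get a first-pass sense of how much mechanical cooling free cooling could offset.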

If that’s not an option, you might be better off with a liquid cooling system. This can be especially effective if your facility already has a chiller in place for full-building temperature control. You’ll of course need to find the right balance for your specific needs, to ensure you’re not just trading higher power consumption for higher water use.

That said, most data centers are using air cooling right now. So, while liquid cooling systems can use less power and be more efficient, they’re also going to be more expensive to get your hands on.

Some data centers are using smart technology to make themselves more efficient, no matter what cooling system they’re using. For example, if there’s higher demand on their equipment at defined peak hours, they can use their building automation system to adjust the temperature in the server room during those times. Google has used AI (big surprise) along similar lines: in 2016 it reported cutting the energy used for cooling its data centers by up to 40%, and in 2018 it reported average savings of around 30% from a more automated version of the system.
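The peak-hours idea boils down to a simple schedule. Here’s a minimal Python sketch of one, assuming a 9-to-5 peak window and two made-up setpoints. It isn’t tied to any particular building automation product, and the function name is hypothetical.

```python
# Pick a server room temperature setpoint based on the hour of the day.
# Peak window and setpoints are illustrative assumptions.


def setpoint_c(hour, peak_hours=range(9, 17), normal_c=24.0, peak_c=22.0):
    """Return the target room temperature in deg C for an hour from 0 to 23."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return peak_c if hour in peak_hours else normal_c


for hour in (3, 9, 12, 17):
    print(f"{hour:02d}:00 -> {setpoint_c(hour):.1f} C")
```

A real system would react to measured load and temperature instead of the clock alone, but a fixed schedule like this is often the starting point.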

What key features do most successful data centers share?

1. Raised Floors

Build a raised floor anywhere from 2 to 48 inches above your facility’s actual floor. Then place the server racks on the raised floor and secure as needed. This creates a convenient space where you can place your HVAC equipment and run any necessary electrical cabling, although it’s now good practice to run these cables through the ceiling. This space is also a good way to move cold air throughout the facility.

2. Hot and Cold Aisles

Arrange your data center with the server cabinets in a row pattern, which allows you to fit more cabinets into the space. Each row should face the opposite direction of the one next to it. That way, cold air intakes face cold air intakes and hot air exhausts face hot air exhausts, creating alternating aisles with output happening in the hot aisle and input in the cold aisle.

Place air handlers at the end of each aisle. Make sure they’re distributing air properly and not working against each other, which can raise your power bill without a nice cool data center to show for it. You can also add doors and walls to the room to direct air flow and keep the hot and cold air where it’s supposed to be.

The cabinets should be as full as possible without any empty space in between equipment; cover any unavoidable gaps with blanking panels. This prevents hot and cold air from mixing. While you’re at it, make sure there aren’t any leaks in the system where cold air can escape, whether it’s in the raised floor, the cabinets, cable openings, or anywhere else.

3. Reliable Power Supply

The whole point of a data center is to prevent interruptions in data flow. And the best way to ensure you’re fulfilling that purpose is to have an uninterruptible power supply (UPS), plus a backup generator to keep things up and running if the main power ever goes out. If you’re feeling extra cautious, think about adding another power line for added redundancy.

If you have a big space with a lot of servers, you’ll want 10 – 20 kW of power per cabinet. Your needs could change over time, so plan for future space and power expansion, too.
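To make the planning step concrete, here’s a small Python sketch that projects total power needs forward. The cabinet count, growth rate, and time horizon are hypothetical inputs, and compound yearly growth is just one simple way to model expansion.

```python
# Project total cabinet power today and after a few years of growth.
# Inputs are illustrative; compound growth is a simplifying assumption.


def cabinet_power_plan(cabinets, kw_per_cabinet, annual_growth, years):
    """Return (power needed today, power needed after `years`) in kW."""
    today_kw = cabinets * kw_per_cabinet
    future_kw = today_kw * (1 + annual_growth) ** years
    return today_kw, future_kw


# 20 cabinets at 10 kW each, growing 10% a year for 5 years
today_kw, future_kw = cabinet_power_plan(20, 10, 0.10, 5)
print(f"Today: {today_kw:.0f} kW, in 5 years: {future_kw:.0f} kW")
```

Since nearly every kilowatt of server power becomes heat, the future number is also a first estimate of how much cooling capacity you’ll need to grow into.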

4. Smart Investment

At this point, we bet you’re wondering, “that’s great, but how much does a data center cost to build and cool?”

The short answer is—it depends. And planning for this investment, even if you aren’t sure exactly how much it’ll be, is a key part of ensuring your data center is properly cooled and set up for success.

If you’re building an enterprise data center, costs can include any renovations needed to create space or adapt your existing HVAC system, as well as your IT workers and equipment like servers, cabinets, and cable. If you’re creating an edge data center, add the cost of real estate in your area to your expenses, too. You may also be looking at security expenses to keep your equipment physically safe.

A data center can be a large up-front investment, but it’s also high reward. Your data will be zipping along faster than you can say “high-speed connection,” ideally making your workers more productive and your customers happier.

Summing it all up: Data center cooling basics

To recap, in this article we:

- Defined what a data center is,

- Outlined the main types of data centers,

- Explained why data centers need cooling,

- Outlined the main types of data center cooling systems,

- Walked you through how data center cooling works,

- Discussed eco-friendly options, and

- Shared four basic features that most successful data centers have in common.

We hope this helped you appreciate why data center cooling is a growing industry, and why it’s such a hot topic for HVAC professionals!

We can’t wait to hear from you!

Want to discuss your specific data center cooling needs? Our HVAC experts are here to help. Contact us or keep scrolling down to the comments section, and we’ll be happy to answer any questions.

*Please note: Gateway Mechanical doesn’t endorse any of the companies or products listed or linked to in this article, and we aren’t paid or otherwise compensated for mentioning them.